Image colorization has attracted research interest for decades. However, existing methods still struggle to produce satisfactory colorizations of grayscale images because they lack a human-like global understanding of color. Recently, large-scale text-to-image (T2I) models have been used to transfer semantic information from text prompts to the image domain, where the text provides global control over the semantic objects in the image. In this work, we introduce a colorization model that piggybacks on an existing, powerful T2I diffusion model. Our key idea is to exploit the color prior knowledge in the pre-trained T2I diffusion model for realistic and diverse colorization. A diffusion guider is designed to incorporate the pre-trained weights of the latent diffusion model and output a latent color prior that conforms to the visual semantics of the grayscale input. A lightness-aware VQVAE then generates the colorized result with pixel-perfect alignment to the given grayscale image. Our model can also perform conditional colorization with additional inputs (e.g., user hints and text). Extensive experiments show that our method achieves state-of-the-art performance in terms of perceptual quality.
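To make "pixel-perfect alignment to the grayscale image" concrete: the output should keep the input's lightness exactly and only add color. The paper achieves this with a learned lightness-aware VQVAE. The sketch below is a much simpler, non-learned stand-in, not the paper's method: it takes the chroma of a candidate colorization and re-attaches the input's luminance using the BT.601 YCbCr transform. The helper name `transfer_chroma` and the choice of YCbCr are assumptions for illustration only.

```python
import numpy as np

# ITU-R BT.601 full-range RGB -> YCbCr (inputs in [0, 1], chroma centered at 0).
_RGB_TO_YCBCR = np.array([
    [0.299, 0.587, 0.114],
    [-0.168736, -0.331264, 0.5],
    [0.5, -0.418688, -0.081312],
])
_YCBCR_TO_RGB = np.linalg.inv(_RGB_TO_YCBCR)


def transfer_chroma(gray, colorized):
    """Hypothetical helper (not from the paper): keep the luma of `gray` (H, W)
    and the chroma of `colorized` (H, W, 3). Inputs are floats in [0, 1].
    Returns an (H, W, 3) RGB array clipped to [0, 1]."""
    gray = np.asarray(gray, dtype=np.float64)
    colorized = np.asarray(colorized, dtype=np.float64)
    if colorized.shape != gray.shape + (3,):
        raise ValueError("colorized must have shape gray.shape + (3,)")
    ycbcr = colorized @ _RGB_TO_YCBCR.T  # per-pixel RGB -> YCbCr
    ycbcr[..., 0] = gray                 # replace luma with the input lightness
    rgb = ycbcr @ _YCBCR_TO_RGB.T        # per-pixel YCbCr -> RGB
    return np.clip(rgb, 0.0, 1.0)        # clipping may alter luma for extreme chroma
```

Because the luma channel is copied directly from the input, every output pixel has the same brightness as the grayscale pixel it came from (up to clipping); only the hue and saturation come from the candidate colorization.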

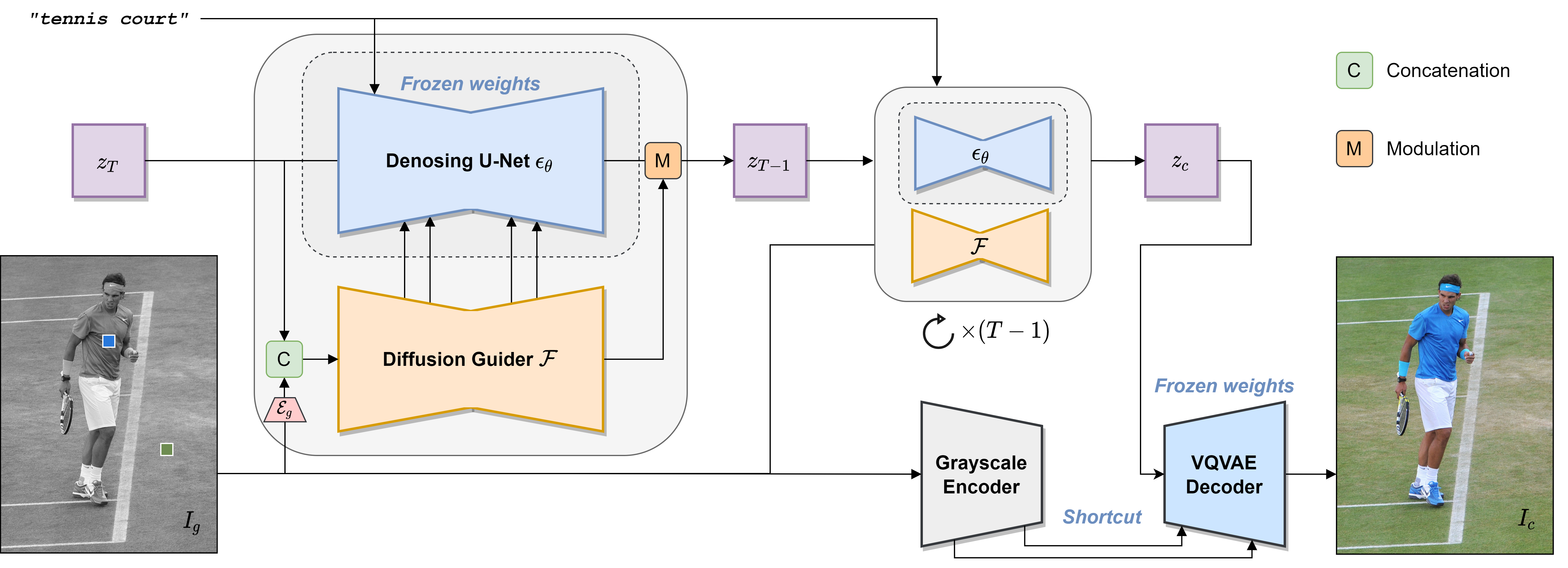

Method overview. Our framework consists of two components: a latent diffusion guider model and a lightness-aware VQVAE model. Given the grayscale image \(I_g\) and optional textual descriptions \(t\) or hint points \(\{h\}\), the latent diffusion guider model guides the pretrained Stable Diffusion model to generate a "colorized" latent \(z_c\) through the denoising diffusion process. The lightness-aware VQVAE model then uses \(z_c\) as the latent color prior and incorporates the grayscale information of \(I_g\) to produce a pixel-aligned colorization \(I_c\).
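The overview lists optional hint points \(\{h\}\) as an input but does not say here how they are encoded. A common convention in user-guided colorization, assumed here and not confirmed by this page, is to rasterize sparse color hints into a dense color map plus a binary mask channel that a network can consume alongside \(I_g\). The function `rasterize_hints`, the `(row, col, (r, g, b))` hint format, and the `radius` parameter are all hypothetical.

```python
import numpy as np


def rasterize_hints(height, width, hints, radius=0):
    """Hypothetical encoder: turn sparse hints into a (4, H, W) float32 array.

    `hints` is a list of (row, col, (r, g, b)) with colors in [0, 1].
    Channels 0-2 hold the hint color; channel 3 is a 0/1 mask of hinted pixels.
    Each hint fills a (2*radius+1) x (2*radius+1) square, clipped at the border;
    later hints overwrite earlier ones where squares overlap.
    """
    out = np.zeros((4, height, width), dtype=np.float32)
    for row, col, color in hints:
        if not (0 <= row < height and 0 <= col < width):
            raise ValueError(f"hint ({row}, {col}) is outside the image")
        r0, r1 = max(row - radius, 0), min(row + radius + 1, height)
        c0, c1 = max(col - radius, 0), min(col + radius + 1, width)
        # Broadcast the (3,) color over the square region.
        out[:3, r0:r1, c0:c1] = np.asarray(color, dtype=np.float32)[:, None, None]
        out[3, r0:r1, c0:c1] = 1.0
    return out
```

The mask channel lets a model distinguish "no hint here" from "hint of black (0, 0, 0)", which a color map alone cannot express.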

@article{liu2023piggybackcolor,
  title={Improved Diffusion-based Image Colorization via Piggybacked Models},
  author={Hanyuan Liu and Jinbo Xing and Minshan Xie and Chengze Li and Tien-Tsin Wong},
  year={2023},
  eprint={2304.11105},
  archivePrefix={arXiv},
  primaryClass={cs.CV}
}